Loading...

Searching...

No Matches

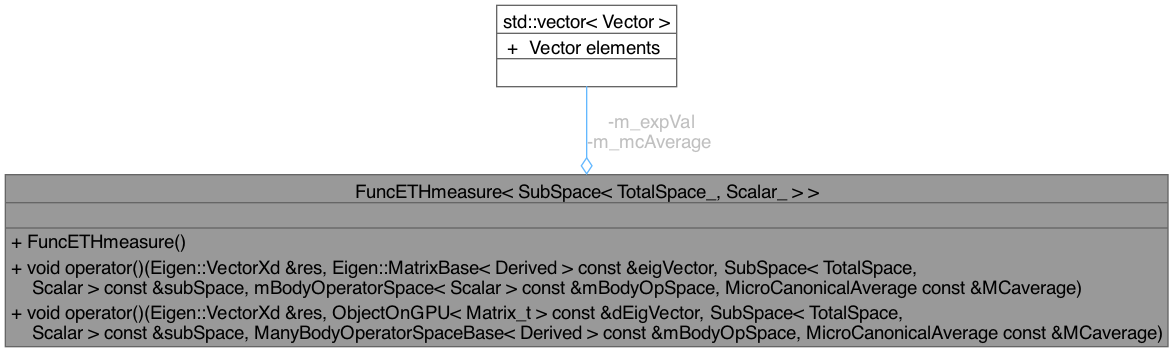

FuncETHmeasure< SubSpace< TotalSpace_, Scalar_ > > Class Template Reference

#include <ETHmeasure.hpp>


Public Member Functions

| | FuncETHmeasure () |
| template<class Derived > void | operator() (Eigen::VectorXd &res, Eigen::MatrixBase< Derived > const &eigVector, SubSpace< TotalSpace, Scalar > const &subSpace, mBodyOperatorSpace< Scalar > const &mBodyOpSpace, MicroCanonicalAverage const &MCaverage) |
| template<typename Matrix_t , class Derived > void | operator() (Eigen::VectorXd &res, ObjectOnGPU< Matrix_t > const &dEigVector, SubSpace< TotalSpace, Scalar > const &subSpace, ManyBodyOperatorSpaceBase< Derived > const &mBodyOpSpace, MicroCanonicalAverage const &MCaverage) |

Private Types

| using | TotalSpace = TotalSpace_ |
| using | Scalar = Scalar_ |
| using | Real = typename SubSpace< TotalSpace_, Scalar_ >::Real |
| using | Vector = Eigen::VectorXd |

Private Attributes

| std::vector< Vector > | m_expVal |
| std::vector< Vector > | m_mcAverage |

Member Typedef Documentation

◆ Real

template<class TotalSpace_ , typename Scalar_ >

| using Real = typename SubSpace< TotalSpace_, Scalar_ >::Real | private |

◆ Scalar

template<class TotalSpace_ , typename Scalar_ >

| using Scalar = Scalar_ | private |

◆ TotalSpace

template<class TotalSpace_ , typename Scalar_ >

| using TotalSpace = TotalSpace_ | private |

◆ Vector

template<class TotalSpace_ , typename Scalar_ >

| using Vector = Eigen::VectorXd | private |

Constructor & Destructor Documentation

◆ FuncETHmeasure()

template<class TotalSpace_ , typename Scalar_ >

| FuncETHmeasure () | inline |

    // ...
      debug_constructor_printf(1);
    }

- std::vector< Vector > m_mcAverage (Definition ETHmeasure.hpp:143)
- std::vector< Vector > m_expVal (Definition ETHmeasure.hpp:142)

Member Function Documentation

◆ operator()() [1/2]

template<class TotalSpace_ , typename Scalar_ >

template<class Derived >

| void FuncETHmeasure< SubSpace< TotalSpace_, Scalar_ > >::operator() ( Eigen::VectorXd & res, Eigen::MatrixBase< Derived > const & eigVector, SubSpace< TotalSpace, Scalar > const & subSpace, mBodyOperatorSpace< Scalar > const & mBodyOpSpace, MicroCanonicalAverage const & MCaverage ) |

    {
      // ...
          << ": eigVector is NOT on GPU. Using CPU algorithm...");

      mBodyOpSpace.computeTransEqClass();
      // ...
      res = Eigen::VectorXd::Zero(subSpace.dim());
      if(res.norm() > 1.0e-4) {
        std::cerr << "Error(" << __func__
                  << ") : failed to initialize res: res.norm() = " << res.norm() << " is too large."
                  << std::endl;
        std::exit(EXIT_FAILURE);
      }
      // ...
          [&eigVector](auto& x) { x.resize(eigVector.cols()); });

      debug_print(eigVector);
      // ...

      omp_set_max_active_levels(1);
    // #pragma omp parallel for reduction(+ : res)
      // ...
        int thread = omp_get_thread_num();

        m_expVal[thread]
            = (eigVector.adjoint()
               // ...
                   .pruned()
                   .eval()
               * eigVector)
                  .diagonal()
                  .real();
        // ...

        res += mBodyOpSpace.transPeriod(opEqClass)
        // ...
      }
      // ...
          << ": eigVector is NOT on GPU. Using CPU algorithm...");
    }
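
The m_expVal[thread] assignment in the listing above takes the real part of the diagonal of a product of the form V† A V, which gives one expectation value per column of eigVector. The following is a minimal, dependency-free sketch of that quantity for real dense matrices. It is an illustration under stated assumptions, not the code from ETHmeasure.hpp: the operator factor that is elided from the listing is replaced by a generic matrix A, and the names Matrix and diagonalExpectation are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical type (not part of ETHmeasure.hpp): dense row-major real matrix.
using Matrix = std::vector<std::vector<double>>;

// Hypothetical helper: returns d[j] = sum_{a,b} V[a][j] * A[a][b] * V[b][j],
// i.e. the diagonal of V^T A V, without forming the full matrix product.
// Assumes A is dim x dim and V has dim rows of equal length.
std::vector<double> diagonalExpectation(Matrix const& A, Matrix const& V) {
  std::size_t const dim = A.size();
  std::size_t const nVec = (dim == 0) ? 0 : V[0].size();
  std::vector<double> diag(nVec, 0.0);
  for(std::size_t j = 0; j < nVec; ++j) {
    for(std::size_t a = 0; a < dim; ++a) {
      double av = 0.0;  // a-th component of A * v_j
      for(std::size_t b = 0; b < dim; ++b) av += A[a][b] * V[b][j];
      diag[j] += V[a][j] * av;
    }
  }
  return diag;
}
```

Computing only the diagonal costs one matrix-vector product per column. The Eigen expression in the listing instead forms the product first and then extracts its diagonal.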

- __host__ __device__ Vector_t const & transPeriod() const (Definition HilbertSpace.hpp:317)
- __host__ __device__ int transEqDim() const (Definition HilbertSpace.hpp:309)
- __host__ __device__ void computeTransEqClass() const (Definition HilbertSpace.hpp:357)
- __host__ __device__ Vector_t const & transEqClassRep() const (Definition HilbertSpace.hpp:311)
- __host__ void basisOp(Eigen::SparseMatrix< ScalarType > &res, int opNum) const (Definition OperatorSpace.hpp:104)
- __host__ __device__ SparseCompressed adjoint() const (Definition MatrixUtils.cuh:430)


◆ operator()() [2/2]

template<class TotalSpace_ , typename Scalar_ >

template<typename Matrix_t , class Derived >

| void FuncETHmeasure< SubSpace< TotalSpace_, Scalar_ > >::operator() ( Eigen::VectorXd & res, ObjectOnGPU< Matrix_t > const & dEigVector, SubSpace< TotalSpace, Scalar > const & subSpace, ManyBodyOperatorSpaceBase< Derived > const & mBodyOpSpace, MicroCanonicalAverage const & MCaverage ) |

    {

      // dEigVector should be stored in row-major.

      // ...
          << ": dEigVector is on GPU. (Algorithm is NOT implemented)");
      int nGPUs;
      cuCHECK(cudaGetDeviceCount(&nGPUs));
      mBodyOpSpace.computeTransEqClass();
      std::cout << "FuncETHmeasure(): nGPUs = " << nGPUs
      // ...
      res = Eigen::VectorXd::Zero(subSpace.dim());
      if(res.norm() > 1.0e-4) {
        std::cerr << "Error(" << __func__
                  << ") : failed to initialize res: res.norm() = " << res.norm() << " is too large."
                  << std::endl;
        std::exit(EXIT_FAILURE);
      }
      // ...
      size_t const requiredSmSize = expValMemSize + eigValMemSize;

      // GPU-side preparation
      cudaDeviceProp deviceProp;
      cudaGetDeviceProperties(&deviceProp, 0);

      ObjectOnGPU< Eigen::MatrixX<Real> > dRes(
          Eigen::MatrixX<Real>::Zero(subSpace.dim(), deviceProp.multiProcessorCount).eval());
      ObjectOnGPU< SubSpace<TotalSpace, Scalar> > dSubSpace(subSpace);
      // ...
      ObjectOnGPU< Eigen::VectorX<Real> > dEigVal(MCaverage.eigVal());
      ObjectOnGPU< Eigen::MatrixX<int> > dWork;
      // ...
      int* transEqClassRep = nullptr;
      int* transPeriod = nullptr;
      // ...

      void (*m_kernel)(
          Eigen::DenseBase< std::remove_reference_t<decltype(*dRes.ptr())> > const*,
          // ...
          = &ETHmeasure_kernel;

      // determine the configuration of shared memory
      int shared_memory_size = deviceProp.sharedMemPerMultiprocessor - 1024;
      int nEigVals = (shared_memory_size - expValMemSize) / eigValMemSize;
      int smSize = expValMemSize + nEigVals * eigValMemSize;

      cuCHECK(cudaFuncSetAttribute(m_kernel, cudaFuncAttributeMaxDynamicSharedMemorySize, smSize));
      struct cudaFuncAttributes m_attr;
      cuCHECK(cudaFuncGetAttributes(&m_attr, m_kernel));
      shared_memory_size = m_attr.maxDynamicSharedSizeBytes;

      int constexpr warpSize = 32;
      // ...
      nEigVals = 2;

      smSize
      // ...

      // ...
          << ", m_attr.maxThreadsPerBlock = " << m_attr.maxThreadsPerBlock
          << ", requiredSmSize = " << requiredSmSize << ", smSize = " << smSize
          << ", shared_memory_size = " << shared_memory_size << ", nEigVals = " << nEigVals
          << ", deviceProp.sharedMemPerMultiprocessor = "
          << deviceProp.sharedMemPerMultiprocessor << std::endl;
      assert(nThread >= 1);
      assert(nBlock >= 1);
      assert(smSize <= shared_memory_size);

      // ...
          dSubSpace.ptr(), dAdjointBasis.ptr(), dmBodyOpSpace.ptr(), mBodyOpSpace.transEqDim(),
          transEqClassRep, transPeriod, dWork.ptr());
      cuCHECK(cudaGetLastError());
      cuCHECK(cudaFree(transEqClassRep));
      cuCHECK(cudaFree(transPeriod));

      cuCHECK(cudaDeviceSynchronize());

      res = dRes.get().template cast<double>().rowwise().sum();
    }
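
Two pieces of host-side bookkeeping in the listing above can be illustrated without CUDA or Eigen. The first is the initial shared-memory estimate under the comment "determine the configuration of shared memory". The second is the final reduction of dRes, which holds one column per multiprocessor and is summed row by row into res. The sketch below reproduces only that arithmetic in plain C++. The names SharedMemPlan, planSharedMemory and rowwiseSum are hypothetical. The meaning of expValMemSize and eigValMemSize is not visible in the listing, so they are treated here simply as byte counts supplied by the caller.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical struct (not in ETHmeasure.hpp) bundling the two computed values.
struct SharedMemPlan {
  int nEigVals;  // how many eigValMemSize-sized slots fit in the budget
  int smSize;    // dynamic shared memory to request, in bytes
};

// Same arithmetic as the listing: keep 1024 bytes in reserve, subtract the
// fixed expValMemSize part, and fill the remainder with whole slots.
SharedMemPlan planSharedMemory(int sharedMemPerMultiprocessor, int expValMemSize,
                               int eigValMemSize) {
  int const budget = sharedMemPerMultiprocessor - 1024;
  int const nEigVals = (budget - expValMemSize) / eigValMemSize;
  int const smSize = expValMemSize + nEigVals * eigValMemSize;
  return SharedMemPlan{nEigVals, smSize};
}

// Sums each row of a (dim x nColumns) table of partial results, which is what
// rowwise().sum() does to the per-multiprocessor columns of dRes.
std::vector<double> rowwiseSum(std::vector<std::vector<double>> const& partial) {
  std::vector<double> total(partial.size(), 0.0);
  for(std::size_t i = 0; i < partial.size(); ++i) {
    for(double x : partial[i]) total[i] += x;
  }
  return total;
}
```

With 65536 bytes per multiprocessor, a 4096-byte fixed part and 8000-byte slots, the budget is 64512 bytes, seven slots fit, and the request is 60096 bytes. Note that the listing later overwrites nEigVals with 2 and reassigns smSize, so this covers only the initial estimate.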

- __global__ void ETHmeasure_kernel(Eigen::DenseBase< Derived1 > const *__restrict__ resPtr, Eigen::DenseBase< Derived2 > const *__restrict__ dEigValPtr, typename SubSpace< TotalSpace, Scalar >::Real dE, Eigen::DenseBase< Derived3 > const *__restrict__ dEigVecPtr, SubSpace< TotalSpace, Scalar > const *__restrict__ subSpacePtr, SparseCompressed< Scalar > const *__restrict__ adjointBasisPtr, ManyBodyOperatorSpaceBase< Derived4 > const *__restrict__ dmBodyOpSpacePtr, int const transEqDim, int const *__restrict__ transEqClassRep, int const *__restrict__ transPeriod, Eigen::DenseBase< Derived5 > *__restrict__ dWorkPtr) (Definition ETHmeasure.hpp:37)
- Definition mytypes.hpp:147
- typename SubSpace< TotalSpace_, Scalar_ >::Real Real (Definition ETHmeasure.hpp:140)
- Definition OperatorSpace.hpp:213
- Definition ObjectOnGPU.cuh:149
- Definition HilbertSpace.hpp:568


Member Data Documentation

◆ m_expVal

template<class TotalSpace_ , typename Scalar_ >

| std::vector< Vector > m_expVal | private |

◆ m_mcAverage

template<class TotalSpace_ , typename Scalar_ >

| std::vector< Vector > m_mcAverage | private |

The documentation for this class was generated from the following file:

- /Users/shoki/GitHub/Locality/Headers/StatMech/ETHmeasure.hpp