
ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > > Class Template Reference

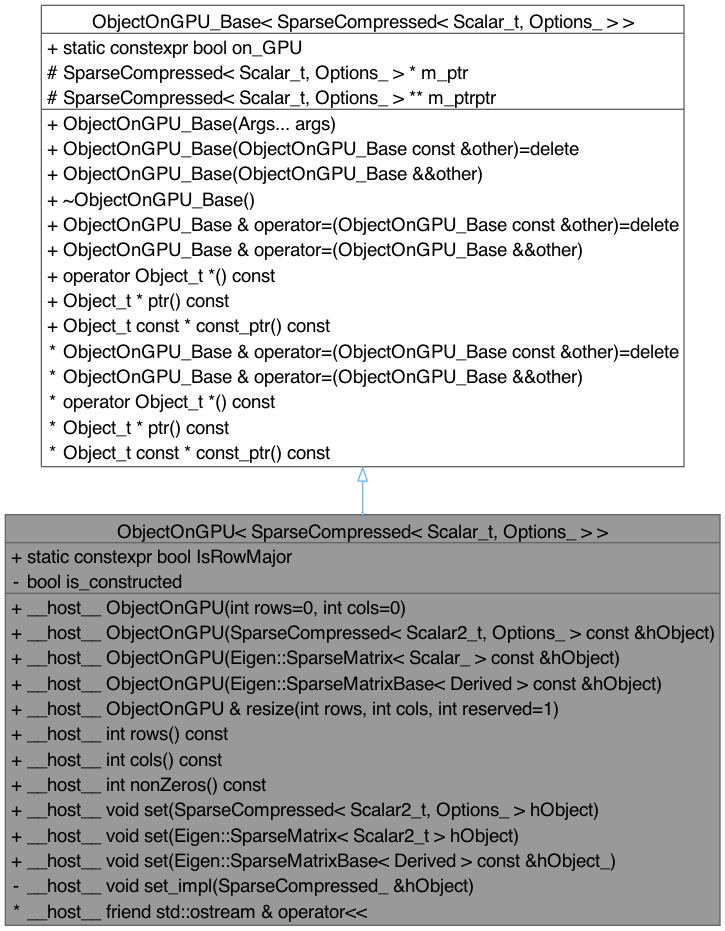

Inheritance diagram for ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >:

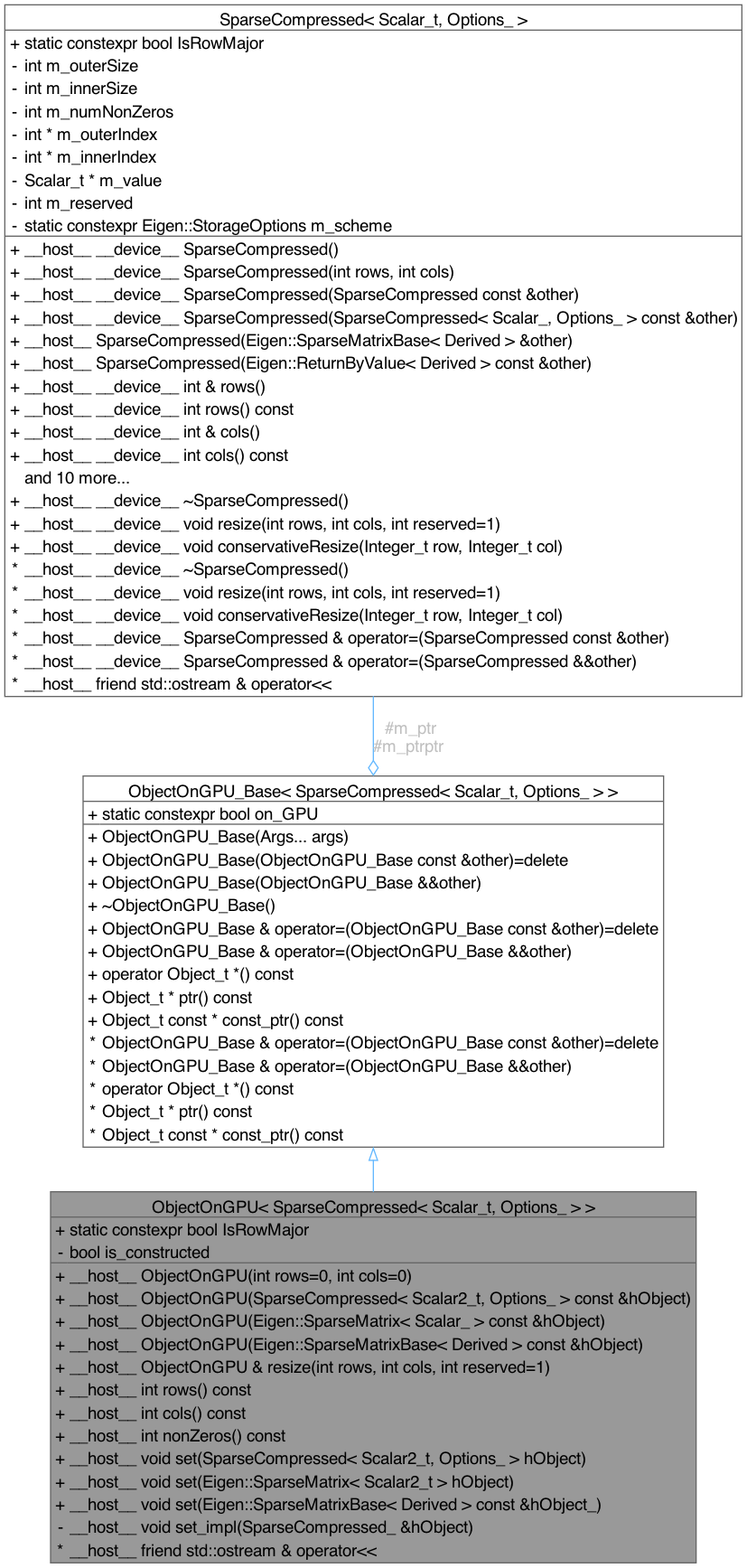

Collaboration diagram for ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >:

Public Types

| using | Scalar = Scalar_t |

| typedef Eigen::NumTraits< Scalar_t >::Real | RealScalar |

Public Member Functions

| __host__ | ObjectOnGPU (int rows=0, int cols=0) |

| template<typename Scalar2_t > | |

| __host__ | ObjectOnGPU (SparseCompressed< Scalar2_t, Options_ > const &hObject) |

| template<class Scalar_ > | |

| __host__ | ObjectOnGPU (Eigen::SparseMatrix< Scalar_ > const &hObject) |

| template<class Derived > | |

| __host__ | ObjectOnGPU (Eigen::SparseMatrixBase< Derived > const &hObject) |

| __host__ ObjectOnGPU & | resize (int rows, int cols, int reserved=1) |

| __host__ int | rows () const |

| __host__ int | cols () const |

| __host__ int | nonZeros () const |

| template<class Scalar2_t > | |

| __host__ void | set (SparseCompressed< Scalar2_t, Options_ > hObject) |

| template<class Scalar2_t > | |

| __host__ void | set (Eigen::SparseMatrix< Scalar2_t > hObject) |

| template<typename Derived > | |

| __host__ void | set (Eigen::SparseMatrixBase< Derived > const &hObject_) |

Public Member Functions inherited from ObjectOnGPU_Base< SparseCompressed< Scalar_t, Options_ > >

| ObjectOnGPU_Base (Args... args) | |

| Default constructor for ObjectOnGPU_Base object. | |

| ObjectOnGPU_Base (ObjectOnGPU_Base const &other)=delete | |

| Copy constructor (deleted). | |

| ObjectOnGPU_Base (ObjectOnGPU_Base &&other) | |

| Move constructor for ObjectOnGPU_Base object. | |

| ~ObjectOnGPU_Base () | |

| Destructor. | |

| ObjectOnGPU_Base & | operator= (ObjectOnGPU_Base const &other)=delete |

| Copy assignment operator (deleted; yet to be implemented). | |

| ObjectOnGPU_Base & | operator= (ObjectOnGPU_Base &&other) |

| Move assignment operator. | |

| operator Object_t * () const | |

| Object_t * | ptr () const |

| Object_t const * | const_ptr () const |
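The inherited members above describe a move-only owner: copy construction and copy assignment are deleted, while move construction and move assignment are provided. The sketch below illustrates that ownership pattern on the host only. `OwningHandle` is a hypothetical name, and it owns a heap object through `new`/`delete`; `ObjectOnGPU_Base` is assumed to own device memory instead, since its `m_ptr` is passed directly to kernels.

```cpp
#include <cassert>
#include <type_traits>
#include <utility>

// Hypothetical host-only analogue of a move-only owner like ObjectOnGPU_Base.
// It owns one heap object through new/delete; the real class is assumed to
// own device memory instead.
template <class Object_t>
class OwningHandle {
 public:
  explicit OwningHandle(Object_t value = Object_t{})
      : m_ptr(new Object_t(std::move(value))) {}

  // Copying is disabled, as in ObjectOnGPU_Base.
  OwningHandle(OwningHandle const&) = delete;
  OwningHandle& operator=(OwningHandle const&) = delete;

  // Moving transfers ownership and leaves the source holding nullptr.
  OwningHandle(OwningHandle&& other) noexcept
      : m_ptr(std::exchange(other.m_ptr, nullptr)) {}

  OwningHandle& operator=(OwningHandle&& other) noexcept {
    if (this != &other) {
      delete m_ptr;  // release what this handle currently owns
      m_ptr = std::exchange(other.m_ptr, nullptr);
    }
    return *this;
  }

  ~OwningHandle() { delete m_ptr; }

  Object_t* ptr() const { return m_ptr; }
  Object_t const* const_ptr() const { return m_ptr; }

 private:
  Object_t* m_ptr = nullptr;
};
```

Because the copy operations are deleted, such a handle can be returned from functions or moved into containers, but it cannot be duplicated by accident, which would otherwise free the same allocation twice.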

Static Public Attributes

| static constexpr bool | IsRowMajor = bool(Options_ == Eigen::RowMajor) |

Static Public Attributes inherited from ObjectOnGPU_Base< SparseCompressed< Scalar_t, Options_ > >

| static constexpr bool | on_GPU |

Private Types

| using | Object_t = SparseCompressed< Scalar_t, Options_ > |

Private Member Functions

| template<class SparseCompressed_ > | |

| __host__ void | set_impl (SparseCompressed_ &hObject) |

Private Attributes

| bool | is_constructed = false |

Friends

Operator overloads

| __host__ friend std::ostream & | operator<< (std::ostream &os, ObjectOnGPU const &sparseMatOnGPU) |

Additional Inherited Members

Protected Types inherited from ObjectOnGPU_Base< SparseCompressed< Scalar_t, Options_ > >

| using | Object_t = SparseCompressed< Scalar_t, Options_ > |

Protected Attributes inherited from ObjectOnGPU_Base< SparseCompressed< Scalar_t, Options_ > >

| SparseCompressed< Scalar_t, Options_ > * | m_ptr |

| SparseCompressed< Scalar_t, Options_ > ** | m_ptrptr |

Member Typedef Documentation

◆ Object_t

template<typename Scalar_t , Eigen::StorageOptions Options_>

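| using ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::Object_t = SparseCompressed< Scalar_t, Options_ > | private |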

◆ RealScalar

template<typename Scalar_t , Eigen::StorageOptions Options_>

| typedef Eigen::NumTraits<Scalar_t>::Real ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::RealScalar |

◆ Scalar

template<typename Scalar_t , Eigen::StorageOptions Options_>

| using ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::Scalar = Scalar_t |

Constructor & Destructor Documentation

◆ ObjectOnGPU() [1/4]

template<typename Scalar_t , Eigen::StorageOptions Options_>

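| __host__ ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::ObjectOnGPU ( int rows = 0, int cols = 0 ) | inline |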


◆ ObjectOnGPU() [2/4]

template<typename Scalar_t , Eigen::StorageOptions Options_>

template<typename Scalar2_t >

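| __host__ ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::ObjectOnGPU ( SparseCompressed< Scalar2_t, Options_ > const & hObject ) | inline |

```cpp
template <typename Scalar2_t>
__host__ ObjectOnGPU(SparseCompressed<Scalar2_t, Options_> const& hObject)
    : ObjectOnGPU(0, 0) {  // delegate to the (rows, cols) constructor with an empty shape
  debug_constructor_printf(1);
  this->set(hObject);  // copy the host matrix into the GPU object
}
```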


◆ ObjectOnGPU() [3/4]

template<typename Scalar_t , Eigen::StorageOptions Options_>

template<class Scalar_ >

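| __host__ ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::ObjectOnGPU ( Eigen::SparseMatrix< Scalar_ > const & hObject ) | inline |

```cpp
template <class Scalar_>
__host__ ObjectOnGPU(Eigen::SparseMatrix<Scalar_> const& hObject)
    : ObjectOnGPU(0, 0) {
  debug_constructor_printf(2);
  // makeCompressed() is non-const, so constness is cast away here. Writing through
  // const_cast is only well-defined if the caller's matrix is not itself const.
  const_cast<Eigen::SparseMatrix<Scalar_>*>(&hObject)->makeCompressed();
  this->set(hObject);
}
```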

◆ ObjectOnGPU() [4/4]

template<typename Scalar_t , Eigen::StorageOptions Options_>

template<class Derived >

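| __host__ ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::ObjectOnGPU ( Eigen::SparseMatrixBase< Derived > const & hObject ) | inline |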

Member Function Documentation

◆ cols()

template<typename Scalar_t , Eigen::StorageOptions Options_>

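| __host__ int ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::cols ( ) const | inline |

```cpp
__host__ int cols() const {
  int* dRowsPtr = nullptr;  // device buffer for the result (name as in the source)
  // (one line not captured; presumably the device allocation of dRowsPtr)
  // Single-thread kernel launch; per its name, it writes the column count to dRowsPtr.
  SparseCompressed_cols_kernel<<<1, 1>>>(this->m_ptr, dRowsPtr);
  cuCHECK(cudaPeekAtLastError());
  cuCHECK(cudaDeviceSynchronize());
  int res;
  // (one line not captured; presumably the device-to-host copy into res)
  cuCHECK(cudaFree(dRowsPtr));
  return res;
}
```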


◆ nonZeros()

template<typename Scalar_t , Eigen::StorageOptions Options_>

| __host__ int ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::nonZeros ( ) const | inline |

◆ resize()

template<typename Scalar_t , Eigen::StorageOptions Options_>

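| __host__ ObjectOnGPU & ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::resize ( int rows, int cols, int reserved = 1 ) | inline |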

◆ rows()

template<typename Scalar_t , Eigen::StorageOptions Options_>

| __host__ int ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::rows ( ) const | inline |

◆ set() [1/3]

template<typename Scalar_t , Eigen::StorageOptions Options_>

template<class Scalar2_t >

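| __host__ void ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::set ( Eigen::SparseMatrix< Scalar2_t > hObject ) | inline |

```cpp
template <class Scalar2_t>
__host__ void set(Eigen::SparseMatrix<Scalar2_t> hObject) {
  // (one line not captured in this listing)
  hObject.makeCompressed();  // hObject is a by-value copy; the caller's matrix is untouched
  this->set_impl(hObject);
}
```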


◆ set() [2/3]

template<typename Scalar_t , Eigen::StorageOptions Options_>

template<typename Derived >

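| __host__ void ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::set ( Eigen::SparseMatrixBase< Derived > const & hObject_ ) | inline |

```cpp
template <typename Derived>
__host__ void set(Eigen::SparseMatrixBase<Derived> const& hObject_) {
  // (one line not captured in this listing)
  // Evaluate the expression into a SparseMatrix with this object's Scalar type.
  Eigen::SparseMatrix<Scalar> hObject(hObject_.template cast<Scalar>());
  this->set(hObject);
}
```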

◆ set() [3/3]

template<typename Scalar_t , Eigen::StorageOptions Options_>

template<class Scalar2_t >

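| __host__ void ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::set ( SparseCompressed< Scalar2_t, Options_ > hObject ) | inline |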

◆ set_impl()

template<typename Scalar_t , Eigen::StorageOptions Options_>

template<class SparseCompressed_ >

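| __host__ void ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::set_impl ( SparseCompressed_ & hObject ) | private |

```cpp
template <class SparseCompressed_>
__host__ void set_impl(SparseCompressed_& hObject) {
  using Scalar2_t = typename SparseCompressed_::Scalar;
  // (partly captured: a debug message ending in `<< hObject.nonZeros() << "\n");`)
  this->resize(hObject.rows(), hObject.cols(), hObject.nonZeros());
  // (one line not captured)
  cuCHECK(cudaPeekAtLastError());
  cuCHECK(cudaDeviceSynchronize());

  // Temporary device buffers for the source outer indices, inner indices and values.
  int* dSrcOuterIndex;
  int* dSrcInnerIndex;
  Scalar* dSrcValueIndex;
  // (lines not captured; presumably the device allocations for the three buffers)

  // The outer-index array has outerSize() + 1 entries.
  cuCHECK(cudaMemcpy(dSrcOuterIndex, hObject.outerIndexPtr(),
                     sizeof(int) * (hObject.outerSize() + 1), cudaMemcpyHostToDevice));
  // (partly captured: presumably the inner-index copy, ending in `cudaMemcpyHostToDevice));`)
  if constexpr (std::is_same_v<Scalar, Scalar2_t>) {
    // Matching scalar types: the values are copied directly
    // (partly captured: the call ends in `cudaMemcpyHostToDevice));`).
  }
  else {
    // Different scalar types: convert the values to Scalar on the host first.
    std::vector<Scalar> hSrcValueIndex(hObject.nonZeros());
    for(int j = 0; j != hSrcValueIndex.size(); ++j) hSrcValueIndex[j] = hObject.valuePtr()[j];
    cuCHECK(cudaMemcpy(dSrcValueIndex, hSrcValueIndex.data(),
                       /* remaining arguments not captured */));
  }

  // (surrounding lines not captured; presumably they set nBlock and nThread from numElem)
  // numElem = number of outer-index entries + number of non-zeros.
  int const numElem = (hObject.outerSize() + 1) + hObject.nonZeros();

  SparseCompressed_set_kernel<<<nBlock, nThread>>>(this->m_ptr, dSrcOuterIndex, dSrcInnerIndex,
                                                   dSrcValueIndex);
  cuCHECK(cudaPeekAtLastError());

  cuCHECK(cudaFree(dSrcOuterIndex));
  cuCHECK(cudaFree(dSrcInnerIndex));
  cuCHECK(cudaFree(dSrcValueIndex));
}
```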
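The `if constexpr` branch in `set_impl()` skips the host-side conversion when the source scalar type already equals `Scalar`; otherwise it converts the values into a host `std::vector<Scalar>` before a single transfer. The following host-only sketch shows the same decision. `stageValues` is a hypothetical helper, and `std::memcpy` stands in for the `cudaMemcpy` host-to-device copy.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <type_traits>
#include <vector>

// Hypothetical host-only analogue of the value copy in set_impl().
// Assumes a trivially copyable scalar type (e.g. float or double).
template <class Dst, class Src>
std::vector<Dst> stageValues(Src const* src, std::size_t n) {
  std::vector<Dst> out(n);
  if constexpr (std::is_same_v<Dst, Src>) {
    // Same type: a raw byte copy is enough (stands in for cudaMemcpy).
    if (n != 0) std::memcpy(out.data(), src, sizeof(Dst) * n);
  } else {
    // Different types: convert element by element first.
    for (std::size_t j = 0; j != n; ++j) out[j] = static_cast<Dst>(src[j]);
  }
  return out;
}
```

Because the branch is resolved at compile time, the conversion loop is not even instantiated when the types match.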

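How `nBlock` and `nThread` are derived from `numElem` is not visible in the captured listing. A common choice, assumed here rather than taken from the source, is a fixed block size with the block count rounded up so that every element gets a thread. `LaunchConfig` and `makeLaunchConfig` are hypothetical names.

```cpp
#include <cassert>

// Hypothetical launch configuration for a one-thread-per-element kernel.
struct LaunchConfig {
  int nBlock;
  int nThread;
};

// Uses a fixed block size and rounds the block count up (ceiling division),
// so that nBlock * nThread >= numElem.
LaunchConfig makeLaunchConfig(int numElem, int threadsPerBlock) {
  assert(numElem > 0 && threadsPerBlock > 0);  // a launch with zero blocks is invalid
  int const nThread = threadsPerBlock;
  int const nBlock = (numElem + nThread - 1) / nThread;
  return {nBlock, nThread};
}
```

With this scheme the last block may be only partly used, so a kernel written this way would have to return early for threads whose global index `blockIdx.x * blockDim.x + threadIdx.x` is not below `numElem`. In `set_impl()`, `numElem` is at least 1, because the outer-index array always has `outerSize() + 1` entries.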

Friends And Related Symbol Documentation

◆ operator<<

template<typename Scalar_t , Eigen::StorageOptions Options_>

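| __host__ std::ostream & operator<< ( std::ostream & os, ObjectOnGPU const & sparseMatOnGPU ) | friend |

```cpp
__host__ friend std::ostream& operator<<(std::ostream& os, ObjectOnGPU const& sparseMatOnGPU) {
  // Host-side matrix with the same shape and number of non-zeros as the GPU object.
  SparseCompressed sparseMat;
  sparseMat.resize(sparseMatOnGPU.rows(), sparseMatOnGPU.cols(),
                   sparseMatOnGPU.nonZeros());
  sparseMat.setNonZeros(sparseMatOnGPU.nonZeros());
  int* srcOuterIndexPtr;
  int* srcInnerIndexPtr;
  Scalar* srcValuePtr;
  // (lines not captured; presumably the device allocations for the three buffers)

  // Single-thread kernel launch; per its name, it copies the object's arrays into the buffers.
  SparseCompressed_copyData_kernel<<<1, 1>>>(sparseMatOnGPU.m_ptr, srcOuterIndexPtr,
                                             srcInnerIndexPtr, srcValuePtr);
  cuCHECK(cudaPeekAtLastError());

  // (lines not captured; presumably the device-to-host copies into sparseMat)

  cuCHECK(cudaFree(srcOuterIndexPtr));
  cuCHECK(cudaFree(srcInnerIndexPtr));
  cuCHECK(cudaFree(srcValuePtr));

  os << sparseMat;
  return os;
}
```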

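The printing operator brings the outer-index, inner-index and value arrays back to the host before streaming the matrix. To show what those three arrays encode, here is a host-only sketch, assuming row-major (compressed sparse row) ordering, that expands them into (row, column, value) triplets. `toTriplets` is a hypothetical helper, not part of the documented API.

```cpp
#include <cassert>
#include <tuple>
#include <vector>

// Hypothetical decoder for row-major compressed storage (CSR). The entries of
// row r occupy positions [outer[r], outer[r + 1]) of the inner and value arrays.
template <class Scalar>
std::vector<std::tuple<int, int, Scalar>> toTriplets(std::vector<int> const& outer,
                                                     std::vector<int> const& inner,
                                                     std::vector<Scalar> const& values) {
  std::vector<std::tuple<int, int, Scalar>> out;
  for (int r = 0; r + 1 < static_cast<int>(outer.size()); ++r) {
    for (int k = outer[r]; k != outer[r + 1]; ++k) {
      out.emplace_back(r, inner[k], values[k]);  // (row, column, value)
    }
  }
  return out;
}
```

For example, the 3x3 matrix with entries 1 at (0,0), 2 at (0,2) and 3 at (2,1) is stored as `outer = {0, 2, 2, 3}`, `inner = {0, 2, 1}`, `values = {1, 2, 3}`; the repeated 2 in `outer` marks the empty row 1.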

Member Data Documentation

◆ is_constructed

template<typename Scalar_t , Eigen::StorageOptions Options_>

| bool ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::is_constructed = false | private |

◆ IsRowMajor

template<typename Scalar_t , Eigen::StorageOptions Options_>

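| constexpr bool ObjectOnGPU< SparseCompressed< Scalar_t, Options_ > >::IsRowMajor = bool(Options_ == Eigen::RowMajor) | static constexpr |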
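`IsRowMajor` decides which dimension is the outer one. Assuming `SparseCompressed` follows Eigen's convention, row-major storage makes the outer index run over rows with column numbers as inner indices, and column-major storage swaps the roles. In either case the outer-index array has `outerSize() + 1` entries while the inner-index and value arrays have `nonZeros()` entries, which is what `set_impl()` copies. The self-contained sketch below uses a hypothetical `compress` function on a dense `std::vector` matrix (no Eigen dependency) to show both layouts.

```cpp
#include <cassert>
#include <vector>

// Hypothetical compressed-storage layout (not the SparseCompressed class).
struct Compressed {
  std::vector<int> outer;      // outerSize + 1 entries
  std::vector<int> inner;      // nonZeros entries
  std::vector<double> values;  // nonZeros entries
};

// Row-major: outer = rows, inner = column indices (CSR).
// Column-major: outer = columns, inner = row indices (CSC).
Compressed compress(std::vector<std::vector<double>> const& dense, bool isRowMajor) {
  int const rows = static_cast<int>(dense.size());
  int const cols = rows == 0 ? 0 : static_cast<int>(dense[0].size());
  int const outerSize = isRowMajor ? rows : cols;
  int const innerSize = isRowMajor ? cols : rows;

  Compressed c;
  c.outer.push_back(0);
  for (int o = 0; o != outerSize; ++o) {
    for (int i = 0; i != innerSize; ++i) {
      double const v = isRowMajor ? dense[o][i] : dense[i][o];
      if (v != 0.0) {  // store only non-zero entries
        c.inner.push_back(i);
        c.values.push_back(v);
      }
    }
    c.outer.push_back(static_cast<int>(c.inner.size()));  // end of this outer slice
  }
  return c;
}
```

For the 2x3 matrix `{{1, 0, 2}, {0, 3, 0}}`, the row-major layout has 3 outer entries and the column-major layout has 4, so the `numElem` used in `set_impl()` would be 6 and 7 respectively.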

The documentation for this class was generated from the following file:

- /Users/shoki/GitHub/Locality/Headers/MatrixUtils/MatrixUtils.cuh